I've been working heads-down with the team for the last couple of months. Our work is mostly around Retrieval-Augmented Generation (RAG). Personally, I learned a lot about machine learning, especially large language models (LLMs) like GPT.

Part of our work was showcased at Microsoft Build 2023 (archives for future readers). Below are the sessions where our work was showcased or mentioned, along with a few other sessions I really liked.

Satya’s Keynote - Link

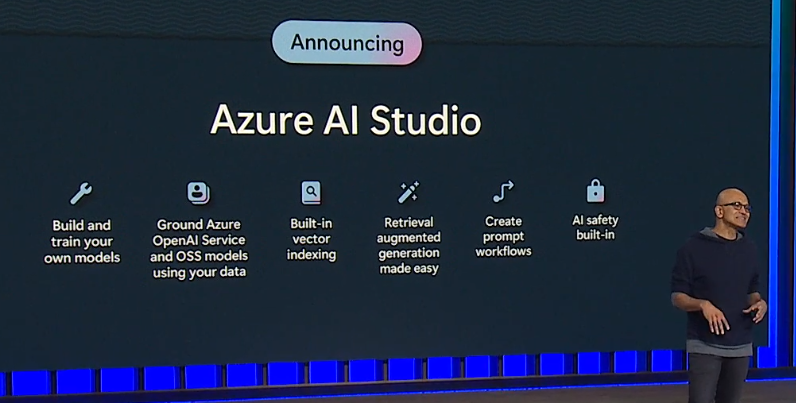

AI dominated the keynote obviously. I recommend watching it to get a high-level idea of what’s in the store in the coming few weeks. There have been multiple teams grinding to release several features. Our team’s work is present on this slide (16:35 mark in the video):

Specifically, these areas: vector indexing, Retrieval-Augmented Generation, and prompt workflows.
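For readers new to these terms, here is a minimal sketch of what vector indexing and retrieval-augmented generation boil down to. It is not our team's implementation or any Azure ML API. Assumptions: the bag-of-words `embed` function is a toy stand-in for a real embedding model, search is brute-force rather than an approximate nearest-neighbour index, the prompt template in `build_prompt` is hypothetical, and the actual LLM call is left out.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words term-count vector.
    A real system would call an embedding model here (assumption)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class VectorIndex:
    """Minimal in-memory vector index: stores (vector, chunk) pairs
    and ranks every chunk against the query (brute-force search)."""

    def __init__(self) -> None:
        self.entries: list[tuple[Counter, str]] = []

    def add(self, chunk: str) -> None:
        # Indexing step: embed each document chunk once and keep it.
        self.entries.append((embed(chunk), chunk))

    def search(self, query: str, k: int = 2) -> list[str]:
        # Retrieval step: return the k chunks most similar to the query.
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[0]), reverse=True)
        return [chunk for _, chunk in ranked[:k]]


def build_prompt(question: str, index: VectorIndex, k: int = 2) -> str:
    """Augmentation step: paste the retrieved chunks into the prompt as
    context; the result would then be sent to an LLM (not shown)."""
    context = "\n".join(f"- {c}" for c in index.search(question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

Usage: add a few text chunks with `index.add(...)`, then pass a user question to `build_prompt(question, index)`. Grounding the model in retrieved chunks, rather than relying only on what it memorized during training, is the core idea behind RAG. Swapping in real embeddings and a proper vector store changes the quality, not the shape, of this pipeline.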

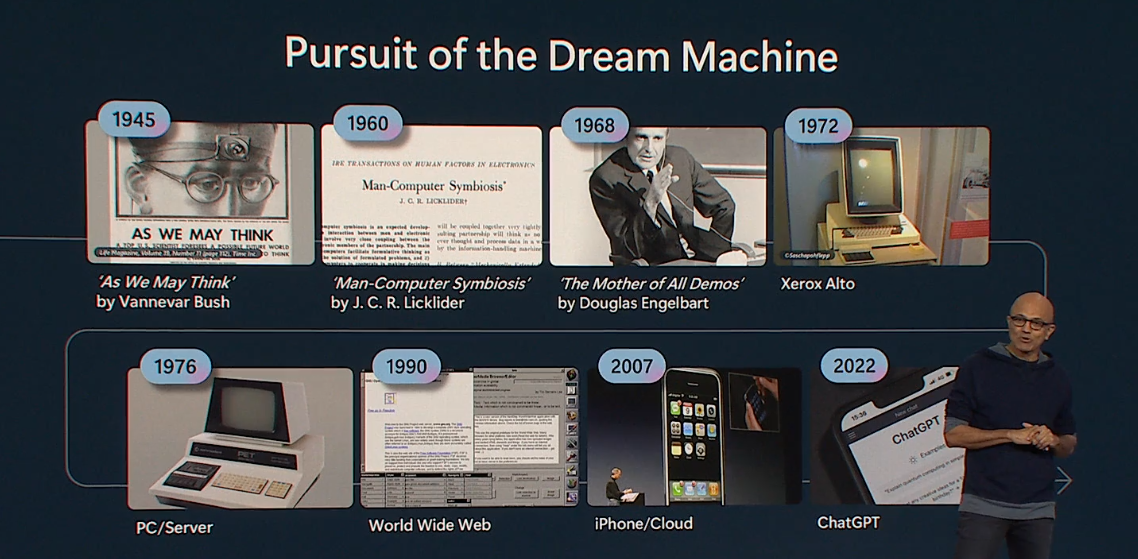

From the keynote, I particularly liked this slide that has historical references that give an idea of what our understanding was of future technology back then:

I’ve linked the first three:

- As We May Think by Vannevar Bush, July 1945

- Man-Computer Symbiosis by J. C. R. Licklider, March 1960

- The Mother of All Demos by Douglas Engelbart, December 1968

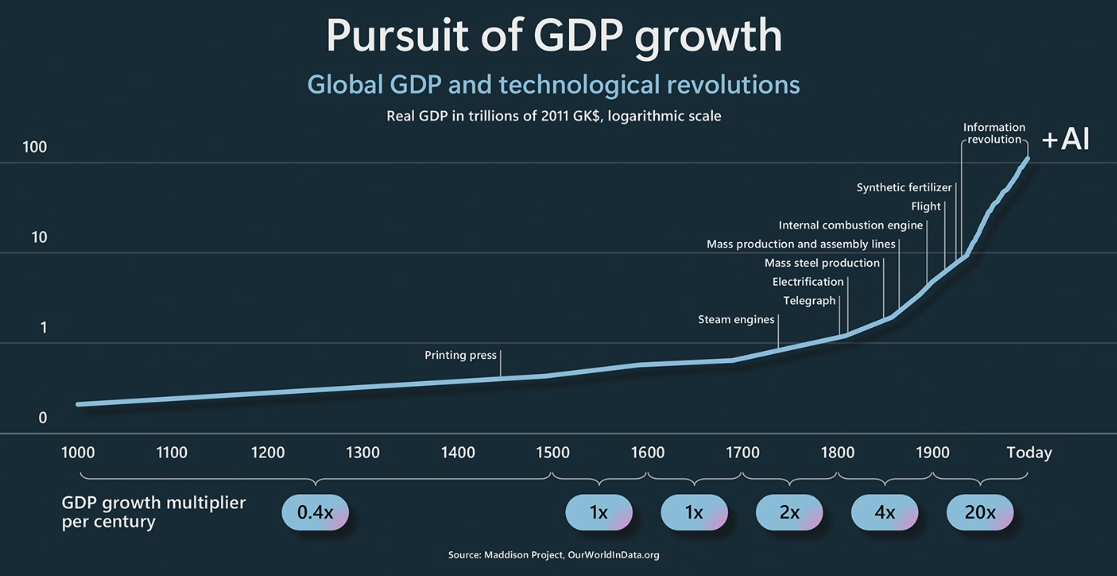

Another interesting slide was a graph around GDP growth with references to revolutionary technological advancements:

Build and maintain your company Copilot with Azure ML and GPT-4 - Link

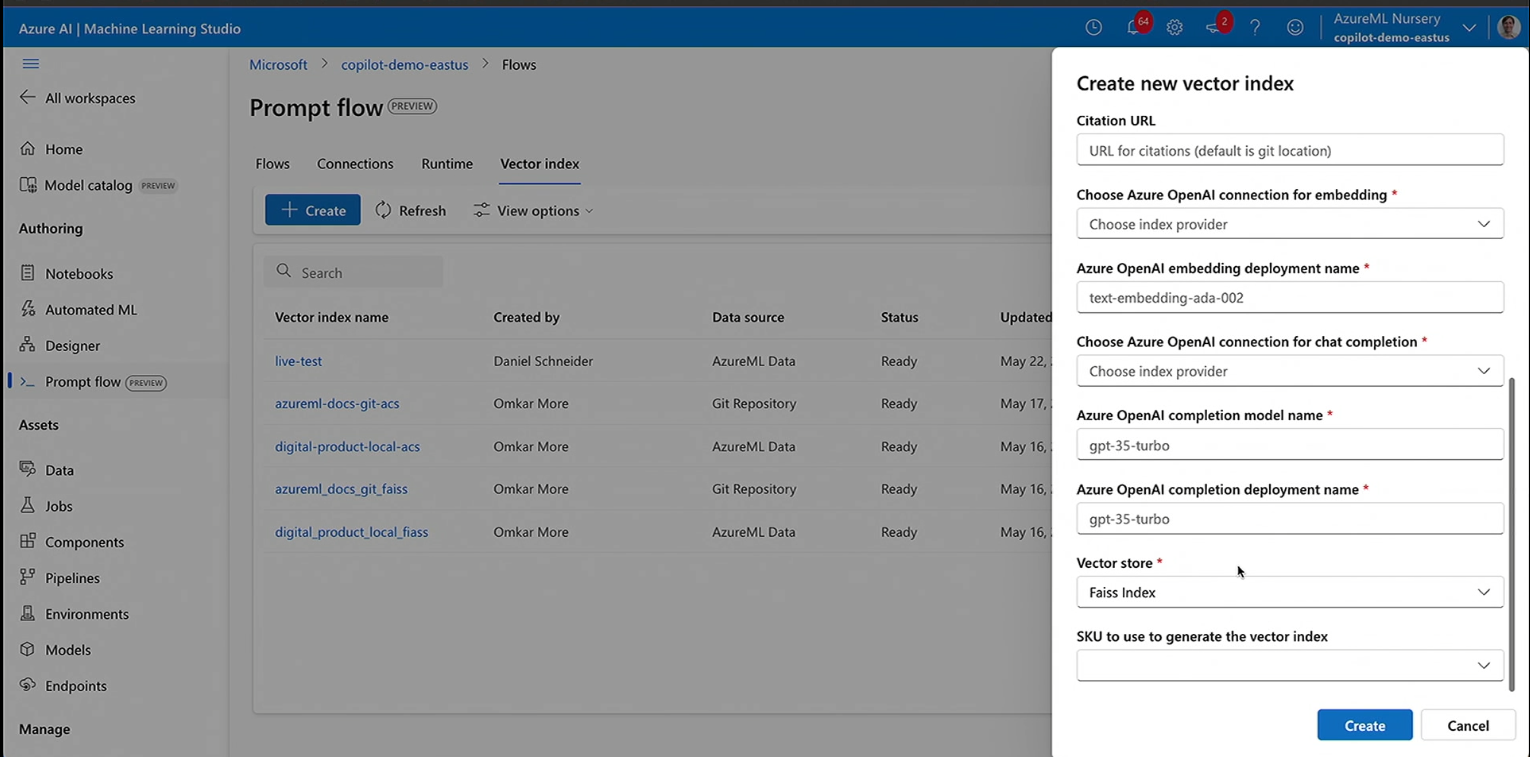

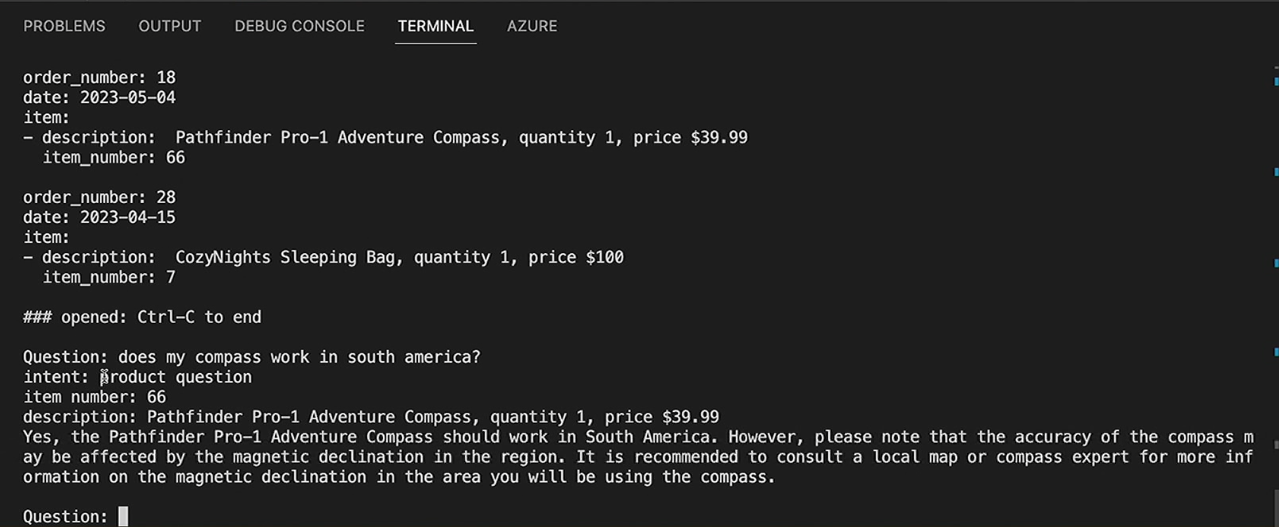

Daniel Schneider and Seth Juarez demoed our team’s work. At 38:00 mark, Daniel shows how to create a Vector Index in PromptFlow:

The full session covers not just our work but also of others around us in AzureML. Great demo session overall. There was some adhoc work I did for Daniel’s Copilot demo. I created a websocket server for his Copilot, and a console client. He demoed it at 19:00 mark:

Looking at the console client, Seth remarks: “If it was 1987 and this is the interface we give to our customers…” (me crying)

The era of the AI Copilot - Link

Delivered by Kevin Scott (Microsoft CTO), this session explains what a Copilot is, with a nice demo (code) at the 35:30 mark. Greg Brockman (OpenAI co-founder) was invited on stage for a Q&A. Kevin also mentioned Harrison Chase (creator of LangChain), who was in the audience. Nice.

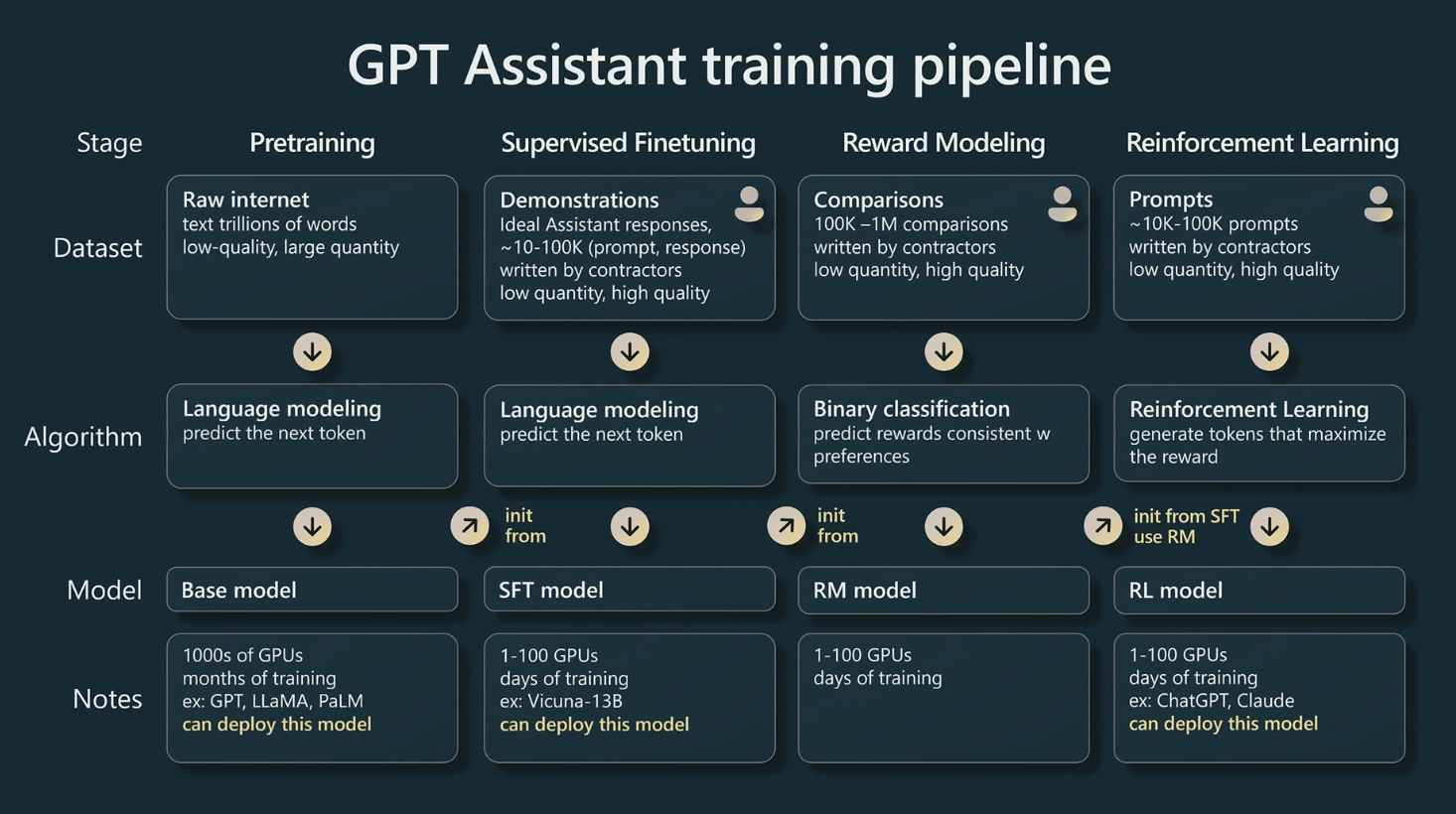

State of GPT - Link

My favorite session of the conference, delivered by Andrej Karpathy of OpenAI. I've been following his work for some time now. The session gives an overview of the ecosystem that has grown around ChatGPT, touching on chain-of-thought prompting, AutoGPT, LangChain, and more. The best part is the explanation of how ChatGPT-style assistants are trained: pretraining, supervised fine-tuning, reward modeling, and reinforcement learning. A deeply technical session. Loved it.

Building AI solutions with Semantic Kernel - Link

I’ve been using LangChain to write LLM-based applications. Microsoft's Semantic Kernel is an alternative, and the two overlap a lot. Semantic Kernel was originally written in C# and now has a Python package too.

Building and using AI models responsibly - Link

Generative models can produce all kinds of output. What goes into and comes out of these models needs to be moderated, for instance to filter out harmful LLM responses. This is the area Responsible AI works in. I feel the problem is big enough that we might see startups focusing on just that.

End

A lot to learn.